Flexi is a high-performance open-source CFD code that scales to hundreds of thousands of processor cores. In this tutorial, we show how flexi is instrumented and optimized with READEX, which results in energy savings of 11 %.

Prerequisite

This tutorial assumes that you have installed the READEX components and that their binaries are available in your $PATH. We use the Intel Compiler Suite and Intel MPI for this test case; other compilers and MPI versions may work as well. We used the input files given here: flexi_input.zip.

Preparation

First we clone flexi and create a build directory:

rschoene@tauruslogin4:/lustre/ssd/rschoene> git clone https://github.com/flexi-framework/flexi.git

...

rschoene@tauruslogin4:/lustre/ssd/rschoene> cd flexi

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi> mkdir build

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi> cd build/

Now, we build flexi once without instrumentation. This will trigger a build of the needed HDF5 library, which we do not want to instrument. The first step prepares compilation:

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> cmake ..

-- The C compiler identification is Intel 17.0.2.20170213

-- The CXX compiler identification is Intel 17.0.2.20170213

-- Check for working C compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icc

-- Check for working C compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features - done

-- Check for working CXX compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icpc

-- Check for working CXX compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icpc -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- The Fortran compiler identification is Intel 17.0.2.20170213

-- Check for working Fortran compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/ifort

-- Check for working Fortran compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/ifort -- works

-- Detecting Fortran compiler ABI info

-- Detecting Fortran compiler ABI info - done

-- Checking whether /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/ifort supports Fortran 90

-- Checking whether /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/ifort supports Fortran 90 -- yes

-- ...

The second step actually compiles the software. Please note that HDF5 seems to have problems with a parallel build process, so we skip the -j flag of make. This process will take a while (several minutes):

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make

Scanning dependencies of target HDF5

Scanning dependencies of target stacksizelib

Scanning dependencies of target userblocklib

Scanning dependencies of target userblocklib_dummy

[ 0%] Creating directories for 'HDF5'

[ 2%] Building C object CMakeFiles/userblocklib.dir/src/output/read_userblock.c.o

[ 2%] Building C object CMakeFiles/stacksizelib.dir/src/globals/stacksize.c.o

[ 2%] Building C object CMakeFiles/userblocklib_dummy.dir/src/output/read_userblock_dummy.c.o

[ 3%] Performing download step (git clone) for 'HDF5'

Cloning into 'HDF5'...

[ 4%] Linking C static library lib/libuserblocklib_dummy.a

[ 5%] Linking C static library lib/libuserblocklib.a

[ 5%] Linking C static library lib/libstacksizelib.a

[ 5%] Built target stacksizelib

[ 5%] Built target userblocklib_dummy

[ 5%] Built target userblocklib

Note: checking out 'hdf5-1_10_0-patch1'.

…

HEAD is now at 77099ba3bc... [svn-r29954] */run*-ex.sh.in: Scripts to compile and run installed needed relative paths to the installed bin directory instead of the ablsolute path from $prefix (on the build machines).

[ 6%] No patch step for 'HDF5'

[ 6%] No update step for 'HDF5'

[ 7%] Performing configure step for 'HDF5'

...

After HDF5 is built, the build of flexi continues. We then install it and keep a copy of the original (non-instrumented) binaries for later comparison:

...

[ 98%] Built target posti_preparerecordpoints

Scanning dependencies of target posti_evaluaterecordpoints

[ 99%] Building Fortran object CMakeFiles/posti_evaluaterecordpoints.dir/posti/recordpoints/evaluate/evaluaterecordpoints.f90.o

ifort: command line remark #10382: option '-xHOST' setting '-xCORE-AVX2'

[100%] Linking Fortran executable bin/posti_evaluaterecordpoints

ifort: command line remark #10382: option '-xHOST' setting '-xCORE-AVX2'

SUCCESS: POSTI_EVALUATERECORDPOINTS BUILD COMPLETE!

[100%] Built target posti_evaluaterecordpoints

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make install

….

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> mv ../bin ../bin_clean

Now we delete the built flexi files (but not the HDF5 build, which lives outside the build directory) and edit CMakeLists.txt to disable the unit tests. The unit tests are sequential, but we use mpicc as linker later, which would result in a Score-P error.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> rm -rf *

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> edit ../CMakeLists.txt

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> git diff

diff --git a/CMakeLists.txt b/CMakeLists.txt

index 3f4ba51..c74e254 100755

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -643,9 +643,9 @@ ENDIF(CTAGS_PATH)

# =========================================================================

# Deactivate tests on hornet since no programs are allowed to run on frontend

-IF(ONHORNET GREATER -1)

- SET(FLEXI_UNITTESTS OFF CACHE BOOL "Enable unit tests after build" FORCE)

-ENDIF()

+# IF(ONHORNET GREATER -1)

+SET(FLEXI_UNITTESTS OFF CACHE BOOL "Enable unit tests after build" FORCE)

+# ENDIF()

IF(FLEXI_UNITTESTS)

INCLUDE(${CMAKE_CURRENT_SOURCE_DIR}/unitTests/CMakeLists.txt)

ENDIF()

First Compilation with Score-P and application of Autofilter

Now that everything is prepared, we can compile everything with Score-P. We ignore the compiler errors and syntax errors reported during this step.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> FC="scorep mpif90" LD="scorep mpicc" cmake ..

-- The C compiler identification is Intel 17.0.2.20170213

-- The CXX compiler identification is Intel 17.0.2.20170213

-- Check for working C compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icc

-- Check for working C compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features - done

-- Check for working CXX compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icpc

-- Check for working CXX compiler: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/bin/intel64/icpc -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- The Fortran compiler identification is Intel 17.0.2.20170213

-- Check for working Fortran compiler: /projects/p_readex/scorep/ci_TRY_READEX_online_access_call_tree_extensions_intelmpi2017.2.174_intel2017.2.174/bin/scorep

-- Check for working Fortran compiler: /projects/p_readex/scorep/ci_TRY_READEX_online_access_call_tree_extensions_intelmpi2017.2.174_intel2017.2.174/bin/scorep -- works

-- Detecting Fortran compiler ABI info

-- Detecting Fortran compiler ABI info - done

-- Checking whether /projects/p_readex/scorep/ci_TRY_READEX_online_access_call_tree_extensions_intelmpi2017.2.174_intel2017.2.174/bin/scorep supports Fortran 90

-- Checking whether /projects/p_readex/scorep/ci_TRY_READEX_online_access_call_tree_extensions_intelmpi2017.2.174_intel2017.2.174/bin/scorep supports Fortran 90 -- yes

-- Found HDF5 Libs: /lustre/ssd/rschoene/flexi/share/Intel-MPI/HDF5/build/lib/libhdf5_fortran.a/lustre/ssd/rschoene/flexi/share/Intel-MPI/HDF5/build/lib/libhdf5.a/usr/lib64/libz.so-ldl

-- Using BLAS/Lapack library

-- Looking for pthread.h

-- Looking for pthread.h - found

-- Looking for pthread_create

-- Looking for pthread_create - not found

-- Looking for pthread_create in pthreads

-- Looking for pthread_create in pthreads - not found

-- Looking for pthread_create in pthread

-- Looking for pthread_create in pthread - found

-- Found Threads: TRUE

-- Looking for Fortran sgemm

-- Looking for Fortran sgemm - found

-- A library with BLAS API found.

-- Looking for Fortran cheev

-- Looking for Fortran cheev - found

-- Found MPI_C: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/mpi/intel64/lib/libmpifort.so (found version "3.1")

-- Found MPI_CXX: /sw/global/compilers/intel/2017/compilers_and_libraries_2017.2.174/linux/mpi/intel64/lib/libmpicxx.so (found version "3.1")

-- Found MPI_Fortran: /projects/p_readex/scorep/ci_TRY_READEX_online_access_call_tree_extensions_intelmpi2017.2.174_intel2017.2.174/bin/scorep (found version "3.1")

-- Found MPI: TRUE (found version "3.1")

-- Building Flexi with MPI

-- MPI Compiler: /projects/p_readex/scorep/ci_TRY_READEX_online_access_call_tree_extensions_intelmpi2017.2.174_intel2017.2.174/bin/scorep

-- Posti: building visu tool.

-- Posti: building mergetimeaverages tool.

-- Posti: using prepare record points tool.

-- Posti: building evaluaterecordpoints tool.

-- Configuring done

-- Generating done

-- Build files have been written to: /lustre/ssd/rschoene/flexi/build

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make -j

Scanning dependencies of target userblocklib_dummy

Scanning dependencies of target stacksizelib

Scanning dependencies of target userblocklib

Scanning dependencies of target flexilibF90

...

SUCCESS: FLEXI2VTK BUILD COMPLETE!

SUCCESS: FLEXI BUILD COMPLETE!

[100%] Built target flexi2vtk

[100%] Built target flexi

...

[100%] Built target posti_visu

We install the Score-P instrumented application:

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make install

[ 1%] Built target stacksizelib

[ 69%] Built target flexilibF90

[ 70%] Built target flexilib

[ 72%] Built target userblocklib_dummy

[ 74%] Built target userblocklib

[ 76%] Built target posti_evaluaterecordpoints

[ 83%] Built target preparerecordpointslibF90

[ 83%] Built target preparerecordpointslib

[ 85%] Built target posti_preparerecordpoints

[ 86%] Built target posti_mergetimeaverages

[ 95%] Built target visulibF90

[ 95%] Built target visulib

[ 96%] Built target flexi

[ 98%] Built target flexi2vtk

[100%] Built target posti_visu

Install the project...

-- Install configuration: "Release"

-- Installing: /lustre/ssd/rschoene/flexi/bin/flexi

-- Installing: /lustre/ssd/rschoene/flexi/bin/flexi2vtk

-- Installing: /lustre/ssd/rschoene/flexi/bin/posti_visu

-- Installing: /lustre/ssd/rschoene/flexi/bin/posti_mergetimeaverages

-- Installing: /lustre/ssd/rschoene/flexi/bin/posti_preparerecordpoints

-- Installing: /lustre/ssd/rschoene/flexi/bin/posti_evaluaterecordpoints

-- Installing: /lustre/ssd/rschoene/flexi/bin/configuration.cmake

-- Installing: /lustre/ssd/rschoene/flexi/bin/userblock.txt

Now we run our application for the first time. I set SCOREP_ENABLE_TRACING=true and SCOREP_TOTAL_MEMORY=2G to be able to show you a picture later. You do not have to do so (unless you want a trace, that is). We switch to the test directory and run flexi with a given input file. Since taurus uses the SLURM batch system, we use srun (instead of mpirun), on two compute nodes with 24 processor cores each.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> srun -n 48 --mem-per-cpu=6G -p haswell ../bin/flexi parameter_flexi_DG.ini
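The two Score-P settings mentioned above are not shown in the transcript. As a minimal sketch (assuming a POSIX shell, and assuming that srun forwards the environment of the calling shell, which is SLURM's default), they could be exported in the same session before the srun call:

```shell
# Record a trace in addition to the profile.
# Only needed if you want to look at a trace later.
export SCOREP_ENABLE_TRACING=true

# Memory budget for the Score-P measurement system;
# 2G is the value used in this tutorial.
export SCOREP_TOTAL_MEMORY=2G
```

Without these exports the run still works; it then only produces the profile that is needed for the following steps.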

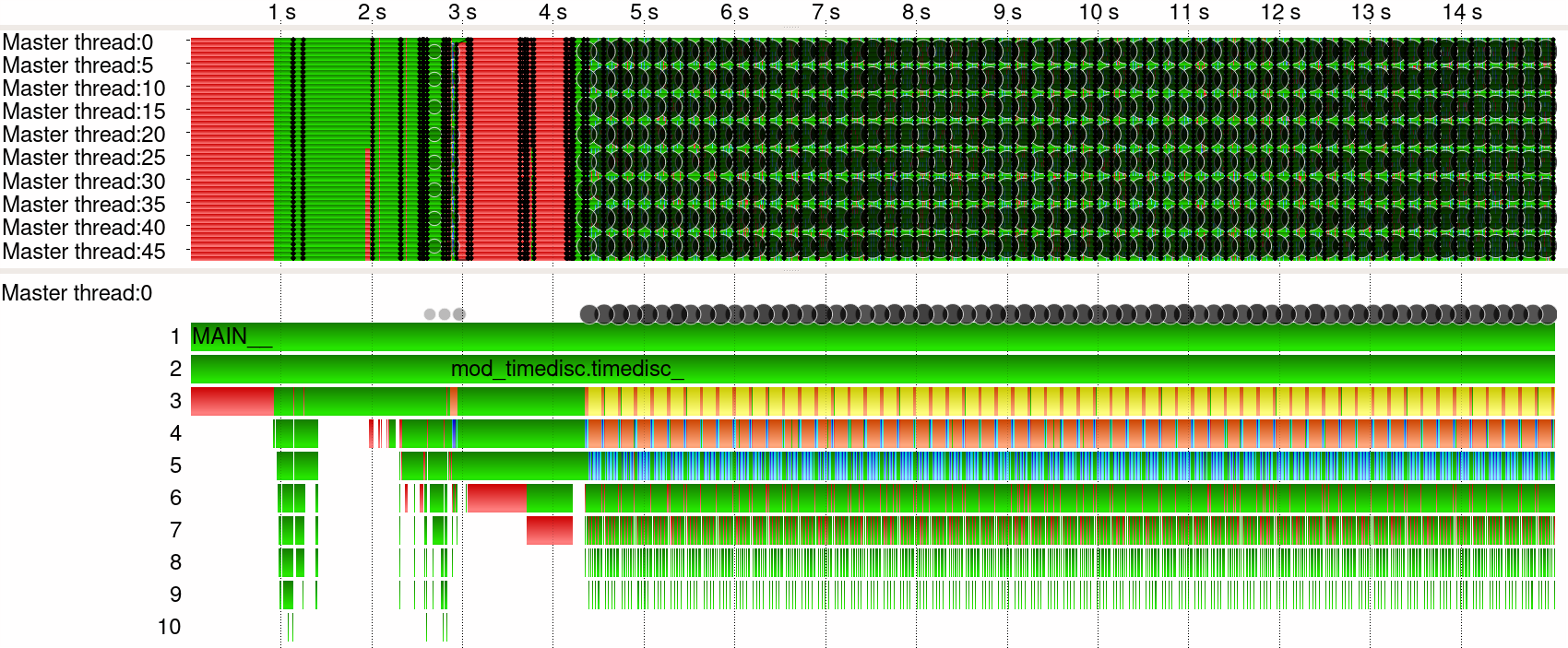

Here’s the resulting trace (located under /lustre/ssd/rschoene/flexi/test/scorep-20180706_0928_24716630515650636 in my case; yours will be somewhere else). At the top, we see an overview of the application with 48 processes running. At the bottom, we see the call stack of the main process (MPI rank 0). After MPI initialization (until second 0.8), an initialization phase starts (until second 4.2). Afterwards, computation starts with the function mod_timedisc.timedisc_. The circles represent MPI communication. MPI regions are marked red and user application code is green; I highlighted some of the regions with other colors. More information can be found in the Vampir user guide.

This function calls a loop (which will later be our phase) that executes other, shorter functions, which could be significant regions. These in turn call very short, insignificant functions, which we now filter using the scorep-autofilter tool, which is part of READEX’s PTF installation.

To create the filter file, I remove the instrumentation of all functions that are shorter than 100 ms (this threshold can be changed with the -t switch) and create an Intel filter file (since we use an Intel compiler). Please make sure to use the correct scorep folder. Yours will have a different timestamp.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> scorep-autofilter -i tcollect_filter scorep-20180706_0928_24716630515650636/profile.cubex
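Since the experiment directory name changes with every run, a small helper can pick it up automatically. The following is only a sketch: latest_scorep_dir is a hypothetical function name, and it assumes that all experiment directories follow the scorep-YYYYMMDD_HHMM_... naming seen above, so that the lexically last name is also the most recent one.

```shell
# Print the lexically last scorep-* directory below the given
# directory (default: current directory). With the timestamped
# names used by Score-P, this is the most recent experiment.
latest_scorep_dir() {
    base="${1:-.}"
    latest=""
    for d in "$base"/scorep-*/; do
        if [ -d "$d" ]; then
            latest="${d%/}"   # strip the trailing slash of the glob
        fi
    done
    if [ -n "$latest" ]; then
        printf '%s\n' "$latest"
    fi
}
```

With this helper, the call above could be written as scorep-autofilter -i tcollect_filter "$(latest_scorep_dir)/profile.cubex". If you set your own experiment directory name, the assumption about the naming scheme no longer holds.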

Then, I recompile flexi with the given filter file:

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> cd ../build/

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> rm -rf *

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> cp ../test/tcollect_filter.filt ./

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> FC="scorep mpif90" LD="scorep mpicc" FFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" CFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" LDFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" CPPFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" cmake ..

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make -j

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make install
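The filter option is repeated four times in the cmake call above, which makes typos easy. One possible way to shorten it, shown here only as a sketch and not as part of the original workflow, is to build the option once and export the variables before calling cmake. FILTER_FLAG is a hypothetical helper variable; the compiler wrappers and flags are the ones used above.

```shell
# Build the filter option once; the filter file is expected
# in the current (build) directory.
FILTER_FLAG="-tcollect-filter $(pwd)/tcollect_filter.filt"

# Compiler and linker wrappers as used in this tutorial.
export FC="scorep mpif90"
export LD="scorep mpicc"

# Pass the same filter option to all compile and link steps.
export FFLAGS="$FILTER_FLAG"
export CFLAGS="$FILTER_FLAG"
export LDFLAGS="$FILTER_FLAG"
export CPPFLAGS="$FILTER_FLAG"

# Afterwards, run in the same shell:
#   cmake ..
```

Note that exported variables stay set for the rest of the shell session, unlike the prefix assignments used above, so they also affect later cmake calls in the same shell.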

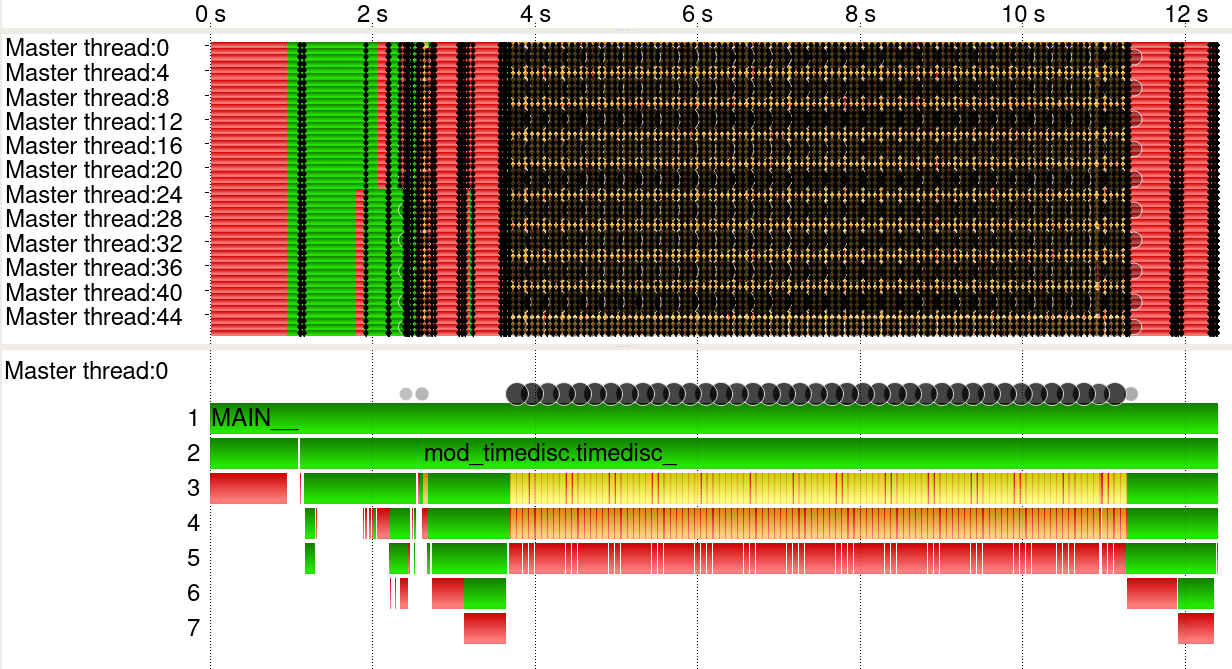

When I re-run the test, I can see that the filter file was actually applied:

This looks much better.

Phase Instrumentation and Application of Periscope

Phase Instrumentation

Now, we instrument the phase manually, since there is no region within the loop. I now add the definition of the phase region (SCOREP_USER_REGION_DEFINE) and mark beginning and end of the loop (SCOREP_USER_OA_PHASE_BEGIN, SCOREP_USER_OA_PHASE_END). Furthermore, I include the Fortran header file scorep/SCOREP_User.inc.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> edit ../src/timedisc/timedisc.f90

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> git diff ../src/timedisc/timedisc.f90

diff --git a/src/timedisc/timedisc.f90 b/src/timedisc/timedisc.f90

index d5ca96e..da6b543 100644

--- a/src/timedisc/timedisc.f90

+++ b/src/timedisc/timedisc.f90

@@ -11,6 +11,9 @@

!

! You should have received a copy of the GNU General Public License along with FLEXI. If not, see <http://www.gnu.org/licenses/>.

!=================================================================================================================================

+

+#include <scorep/SCOREP_User.inc>

+

#include "flexi.h"

!==================================================================================================================================

@@ -185,6 +188,8 @@ INTEGER :: TimeArray(8) !< Array for system ti

INTEGER :: errType,nCalcTimestep,writeCounter

LOGICAL :: doAnalyze,doFinalize

!==================================================================================================================================

+SCOREP_USER_REGION_DEFINE(phase)

+

SWRITE(UNIT_StdOut,'(132("-"))')

@@ -243,7 +248,6 @@ CALL Visualize(t,U)

! No computation needed if tEnd=tStart!

IF((t.GE.tEnd).OR.maxIter.EQ.0) RETURN

-

tStart = t

iter=0

iter_loc=0

@@ -280,6 +284,7 @@ SWRITE(UNIT_StdOut,*)'CALCULATION RUNNING...'

! Run computation

CalcTimeStart=FLEXITIME()

DO

+ SCOREP_USER_OA_PHASE_BEGIN(phase, "Loop-phase", 2)

CurrentStage=1

CALL DGTimeDerivative_weakForm(t)

IF(doCalcIndicator) CALL CalcIndicator(U,t)

@@ -387,6 +392,7 @@ DO

tAnalyze= MIN(tAnalyze+Analyze_dt, tEnd)

doAnalyze=.FALSE.

END IF

+ SCOREP_USER_OA_PHASE_END(phase)

IF(doFinalize) EXIT

END DO

Preparing Design Time Analysis (Compilation)

We recompile the application once more, this time enabling the user instrumentation and the Online Access interface, which is needed by Periscope. Before that, we make a copy of the old flexi binaries for later use with the READEX Runtime Library.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> cd ../build/

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> cp -r ../bin ../bin-rrl

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> rm -rf *

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> cp ../test/tcollect_filter.filt ./

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> FC="scorep --online-access --user --nomemory mpif90" LD="scorep --online-access mpicc" FFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" CFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" LDFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" CPPFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" cmake ..

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make -j install

Applying readex-dyn-detect and Creating readex_config.xml

Now, everything is ready for Design Time Analysis. First, I run the code to produce the profile used by readex-dyn-detect. Afterwards, I run readex-dyn-detect to create a configuration file for Periscope.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> export SCOREP_PROFILING_FORMAT=cube_tuple

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> export SCOREP_METRIC_PAPI=PAPI_TOT_INS,PAPI_L3_TCM

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> srun -n 48 --mem-per-cpu=6G -p haswell ../bin/flexi parameter_flexi_DG.ini

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> readex-dyn-detect -p Loop-phase scorep-20180809_1409_13924252150697532/profile.cubex

Reading scorep-20180809_1409_13924252150697532/profile.cubex...

Done.

Granularity threshold: 0.1

89candidate_nodes

Phase node Loop-phase found in candidate regions. It has 4 children.

89 coarse_nodes

Phase node Loop-phase

There is a phase region

Granularity of Loop-phase: 1.60044

NAME: mod_dg.dgtimederivative_weakform_

Granularity of mod_timedisc.timestepbylserkw2_: 1.2536

Granularity of mod_dg.dgtimederivative_weakform_: 0.291937

Granularity of mod_hdf5_output.writestate_: 1.98935

Granularity of mod_hdf5_output.generatefileskeleton_: 0.0713945

NAME: mod_hdf5_output.gatheredwritearray_

Granularity of mod_hdf5_output.writeadditionalelemdata_: 0.238243

NAME: mod_io_hdf5.opendatafile_

NAME: mod_io_hdf5.closedatafile_

Granularity of mod_hdf5_output.gatheredwritearray_: 0.945916

Granularity of mod_io_hdf5.opendatafile_: 0.0185413

Granularity of mod_hdf5_output.writearray_: 0.775095

Granularity of mod_io_hdf5.closedatafile_: 0.0519488

Granularity of mod_hdf5_output.markwritesuccessfull_: 0.0149707

Granularity of mod_analyze.analyze_: 0.513116

Granularity of mod_analyze.calcerrornorms_: 0.512436

Candidate regions are:

Loop-phase

mod_timedisc.timestepbylserkw2_

mod_dg.dgtimederivative_weakform_

mod_hdf5_output.writestate_

mod_hdf5_output.writeadditionalelemdata_

mod_hdf5_output.gatheredwritearray_

mod_hdf5_output.writearray_

mod_analyze.analyze_

mod_analyze.calcerrornorms_

Call node: mod_dg.dgtimederivative_weakform_ Inclusive Time 5601.47

Parent node: mod_timedisc.timestepbylserkw2_ Exclusive Time 412.019

Call node: mod_hdf5_output.writearray_ Inclusive Time 3.98817

Parent node: mod_hdf5_output.gatheredwritearray_ Exclusive Time 0.00285408

Call node: mod_analyze.calcerrornorms_ Inclusive Time 24.3139

Parent node: mod_analyze.analyze_ Exclusive Time 0.678844

Significant regions are:

mod_analyze.calcerrornorms_

mod_dg.dgtimederivative_weakform_

mod_hdf5_output.writearray_

Significant region information

==============================

Region name Min(t) Max(t) Time Time Dev.(%Reg) Ops/L3miss Weight(%Phase)

mod_dg.dgtimederivative_w 0.281 0.314 116.910 0.0 0 73

mod_hdf5_output.writearra 0.073 0.073 0.073 0.0 66960 0

mod_analyze.calcerrornorm 0.512 0.512 0.512 0.0 30324 0

Phase information

=================

Min Max Mean Time Dev.(% Phase) Dyn.(% Phase)

1.55337 4.27932 1.60044 160.044 0 170.325

threshold time variation (percent of mean region time): 10.000000

threshold compute intensity deviation (#ops/L3 miss): 10.000000

threshold region importance (percent of phase exec. time): 10.000000

I now change the configuration file according to the available frequencies. On a compute node, the file /sys/devices/system/cpu/cpu0/cpufreq/scaling_available_frequencies lists the available frequencies (in kHz): 2501000 2500000 2400000 2300000 2200000 2100000 2000000 1900000 1800000 1700000 1600000 1500000 1400000 1300000 1200000

I want to check for frequencies between 1.3 and 2.5 GHz with an increment of 200 MHz. I cannot use concurrency throttling, since there is no thread parallelization. I cannot use uncore frequency scaling since multiple MPI ranks share a single uncore. Therefore, I change the file readex_config.xml accordingly:

...

<tuningParameter>

<frequency>

<min_freq>1300</min_freq>

<max_freq>2500</max_freq>

<freq_step>200</freq_step>

<default>2500</default>

</frequency>

...
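Before starting the analysis, it can be worth checking that every frequency implied by min_freq, max_freq and freq_step is actually offered by the hardware. The following is a minimal sketch with two hypothetical helper functions (candidate_freqs and all_available); it takes the kHz list printed above as a string, so it does not need to run on a compute node.

```shell
# Print the candidate frequencies in MHz: min, min+step, ... up to max.
# Usage: candidate_freqs MIN MAX STEP
candidate_freqs() {
    f="$1"
    while [ "$f" -le "$2" ]; do
        echo "$f"
        f=$((f + $3))
    done
}

# Check that each candidate (in MHz) appears in a space-separated
# list of available frequencies (in kHz, as printed by cpufreq).
# Usage: all_available "KHZ LIST" MHZ...
all_available() {
    available="$1"
    shift
    for mhz in "$@"; do
        case " $available " in
            *" ${mhz}000 "*) ;;
            *) echo "not available: ${mhz} MHz"; return 1 ;;
        esac
    done
}

# Frequencies reported on the compute node used in this tutorial.
AVAILABLE="2501000 2500000 2400000 2300000 2200000 2100000 2000000 1900000 1800000 1700000 1600000 1500000 1400000 1300000 1200000"

# Values from readex_config.xml: 1300 to 2500 MHz in steps of 200 MHz.
if all_available "$AVAILABLE" $(candidate_freqs 1300 2500 200); then
    echo "all candidate frequencies are available"
fi
```

On a compute node, AVAILABLE could instead be filled from the scaling_available_frequencies file mentioned above.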

Applying Periscope Tuning Framework and Creating the tuning model

Now we have a configuration file that we can use with Periscope to analyze the program. We do so by applying the Periscope frontend to the application using the SLURM Periscope starter, via a SLURM batch file like the following. First, we define some SLURM parameters (see the SLURM documentation to learn more). Then, we set up the READEX Runtime Library and the energy monitoring plugin. There is one plugin for HDEEM and one for RAPL and APM; here, HDEEM is used. Last, we start Periscope.

#!/bin/sh

#SBATCH --time=0:10:00 # walltime

#SBATCH --nodes=3 # number of nodes requested; 1 for PTF and remaining for application run

#SBATCH --tasks-per-node=24 # number of processes per node for application run

#SBATCH --cpus-per-task=1

#SBATCH --exclusive

#SBATCH --partition=haswell64

#SBATCH --mem-per-cpu=2500M # memory per CPU core

#SBATCH -J "flexi_PTF" # job name

#SBATCH --reservation=READEX

#SBATCH -A p_readex

# loading READEX environment

module purge

module use /projects/p_readex/modules

module load readex/ci_readex_intelmpi2017.2.174_intel2017.2.174

# set-up Score-P

export SCOREP_SUBSTRATE_PLUGINS=rrl

export SCOREP_RRL_PLUGINS=cpu_freq_plugin,uncore_freq_plugin

export SCOREP_RRL_VERBOSE="WARN"

# set-up energy measurement

module load scorep-hdeem/sync-intelmpi-intel2017

export SCOREP_METRIC_PLUGINS=hdeem_sync_plugin

export SCOREP_METRIC_PLUGINS_SEP=";"

export SCOREP_METRIC_HDEEM_SYNC_PLUGIN_CONNECTION="INBAND"

export SCOREP_METRIC_HDEEM_SYNC_PLUGIN_VERBOSE="WARN"

export SCOREP_METRIC_HDEEM_SYNC_PLUGIN_STATS_TIMEOUT_MS=1000

#lower instrumentation overhead

export SCOREP_MPI_ENABLE_GROUPS=ENV

# go to experiment directory

cd /lustre/ssd/rschoene/flexi/test

# start periscope

psc_frontend --phase="Loop-phase" --apprun="../bin_instr_oa/flexi parameter_flexi_DG.ini" --mpinumprocs=48 --ompnumthreads=1 --tune=readex_intraphase --config-file=readex_config.xml

Applying the Tuning Model

From Periscope, we got a tuning model (tuning_model.json) that we can apply. But first, we get rid of the Online Access instrumentation: we recompile flexi without the Online Access interface attached.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> cd ../build/

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> rm -rf *

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> cp ../test/tcollect_filter.filt ./

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> FC="scorep --user --nomemory mpif90" LD="scorep --user --nomemory mpicc" FFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" CFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" LDFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" CPPFLAGS="-tcollect-filter `pwd`/tcollect_filter.filt" cmake ..

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/build> make -j install

We then compare the default and the optimized version with the following batch script:

#!/bin/sh

#SBATCH --time=0:10:00 # walltime

#SBATCH --nodes=2 # number of nodes requested for application run

#SBATCH --tasks-per-node=24 # number of processes per node for application run

#SBATCH --cpus-per-task=1

#SBATCH --exclusive

#SBATCH --partition=haswell

#SBATCH --mem-per-cpu=2500M # memory per CPU core

#SBATCH -J "flexi_RRL" # job name

#SBATCH -A p_readex

#SBATCH --reservation=READEX

# loading READEX environment

module purge

module use /projects/p_readex/modules

module load readex/ci_readex_intelmpi2017.2.174_intel2017.2.174

# set-up Score-P

export SCOREP_SUBSTRATE_PLUGINS=rrl

export SCOREP_RRL_PLUGINS=cpu_freq_plugin,uncore_freq_plugin

export SCOREP_RRL_VERBOSE="DEBUG"

export SCOREP_TUNING_CPU_FREQ_PLUGIN_VERBOSE="DEBUG"

export SCOREP_TUNING_UNCORE_FREQ_PLUGIN_VERBOSE="DEBUG"

# Set-up RRL

export SCOREP_RRL_TMM_PATH="./tuning_model.json"

# Disable Profiling

export SCOREP_ENABLE_PROFILING=false

# Lower MPI overhead

export SCOREP_MPI_ENABLE_GROUPS=ENV

# go to experiment directory

cd /lustre/ssd/rschoene/flexi/test

# run optimized

../bin/flexi parameter_flexi_DG.ini

# run default

../bin_clean/flexi parameter_flexi_DG.ini

Results

Now we can use the SLURM accounting tool sacct to retrieve the energy values measured with HDEEM:

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> sacct --format JobID,ConsumedEnergy -j 17271329

JobID ConsumedEnergy

------------ --------------

17271329

17271329.ba+ 99.09K

17271329.0 92.11K

17271329.1 103.48K

Here we see a saving in energy consumption of 11 %: job step 0 (the optimized run) consumed 92.11 kJ, compared to 103.48 kJ for job step 1 (the default run). When comparing RAPL values (sum of package and DRAM of all used processors), the savings are about 16 % (64.3 kJ vs 76.6 kJ).

When scaling up the input size and the number of processors (e.g., using 8x8x16 elements on two nodes, i.e., doubling data and processor cores), the result stays the same: here, 12 % of the energy is saved. This experiment can be repeated by using the parameter_hopr_unstr_large input to create a new HDF5 file for flexi to use.

rschoene@tauruslogin4:/lustre/ssd/rschoene/flexi/test> sacct --format JobID,ConsumedEnergy -j 17272342

JobID ConsumedEnergy

------------ --------------

17272342

17272342.ba+ 99.80K

17272342.0 183.28K

17272342.1 207.63K
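The percentages quoted in this section follow directly from the energy values. As a small sketch (energy_saving_percent is a hypothetical helper, and awk is assumed to be available), the relative saving of the optimized run against the default run can be computed like this:

```shell
# Relative energy saving in percent, rounded to one decimal.
# Usage: energy_saving_percent OPTIMIZED DEFAULT
# Both values must use the same unit (here: kJ).
energy_saving_percent() {
    awk -v opt="$1" -v ref="$2" \
        'BEGIN { printf "%.1f\n", (ref - opt) / ref * 100 }'
}

# HDEEM values of the first experiment (job 17271329)
energy_saving_percent 92.11 103.48    # prints 11.0

# RAPL values of the first experiment
energy_saving_percent 64.3 76.6       # prints 16.1

# HDEEM values of the scaled-up experiment (job 17272342)
energy_saving_percent 183.28 207.63   # prints 11.7
```

The last value rounds to the 12 % stated above.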